Explain The Idea

Word vector is widely used in text-related AI processes. Its center idea is to maximize the following likelihood function.

$$\begin{equation} \label{eq:originalL}

L(\theta) = \prod_{t=1}^T \prod_{\substack{-m \le j \le m \\ j \ne 0}} p(w_{t+j} | w_t ; \theta)

\end{equation}$$

Terrifying! Right? Let me explain it.

Imagine as if you have a long text which is a sequence of words. At any time, consider a word $w_t$ as center of a window in the long word sequence. What is the probability of words surrounding $w_t$? Rephrase it, what is the probability of surrounding words appearing in a window, given $w_t$ is the center? Solution to this question can be considered in the following way.

For any one specific occurrence of $w_t$, its surrounding is $\{ w_{-m}, w_{-m+1}, …, w_{t-1}, w_{t+1}, …, w_{m-1}, w_{m} \}$. For each of the $w_{t+j}$, where $-m \le j \le m \land j \ne 0$, the probability of $w_{t+j}$ given $w_t$ is just denoted as $p(w_{t+j} | w_t ; \theta)$. The $\theta$ there is the parameter to be trained, which guides how each probability is calculated while the formulae are fixed. In fact, you can think of $\theta$ as a store. When computing $p(w_{t+j} | w_t ; \theta)$, $p$ takes the part of $w_{t+j}$ out of $\theta$, takes the part of $w_t$ out of $\theta$, then do the computation using a fixed formula. Now multiply all $p(w_{t+j} | w_t ; \theta)$ for $w_t$’s surrounding words, we get a probability estimate of one surrounding given $w_t$. This is the $$ \prod_{\substack{-m \le j \le m \\ j \ne 0}} p(w_{t+j} | w_t ; \theta) $$ part.

Now, I have got a probability estimate for one position in the long sequence of words. We use the same formula for all positions, and multiply the probabilities to get the probability estimate of all windows appearing collectively, given the long word sequence. And the whole probability estimate is $L(\theta)$. Note: the word sequence is the universe, therefore it is not explicitly included in the formula.

$L(\theta)$ is clearly between $0$ and $1$, by definition. And the word sequence appears in reality. Therefore, ideally, $L(\theta)$ should be $1$. So, what we need to do is to maximize $L(\theta)$ to make it as close to $1$ as possible.

In real computation, all $p(w_{t+j} | w_t ; \theta)$’s are between $0$ and $1$. Their products will be extremely close to 0. This will cause underflow and loss of precision. Hence, we use $J(\theta)=-\frac{1}{T}log(L(\theta))$ instead. The minus sign changes maximization into minimization, as people are more comfortable with minimization and more software packages are tuned to minimization. The $\frac{1}{T}$ helps to constrain the sum in the magnitude of one log-probability value, namely the sum does not grow indefinitely large in magnitude as $T$ grows. $J(\theta)$ expands to $$

J(\theta)=-\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} log(p(w_{t+j} | w_t ; \theta))

$$

Before working further, we must determine the formula of $p(w_{t+j} | w_t ; \theta)$. Any formula that constitutes a probability density function will do. But softmax is a well acccepted one. So, I will use softmax. This is also the function used in the original algorithm. The original algorithm uses two vectors for each word. $u_c$ when the word considered is the center word, $u_o$ otherwise. Hence, $$ p(w_o | w_c ; \theta) = \frac{exp({u_o}^\top u_c)}{\sum_{q \in V}exp({u_q}^\top u_c)} $$, where $u_o$, $u_c$ and $u_q$ are taken out of $\theta$, and $V$ is the vocabulary, namely the set of all words. In this setting, each word will end up with two vectors. People will have to merge the two vectors for each word, because eventually we want one vector for each word. Here I think, why not just use one vector $u$ for each word? I am going to try it. The formula looks identical: $$ p(w_a | w_b ; \theta) = \frac{exp({u_a}^\top u_b)}{\sum_{d \in V}exp({u_d}^\top u_b)} $$, just it no longer distinguishes center from surrounding. A side effect of this change is that size of $\theta$ is halved.

The full $J(\theta)$ is $$\begin{align}

J(\theta)

&=-\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} log(p(w_{t+j} | w_t ; \theta)) \\

&=-\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} log \left(\frac{exp({u_{t+j}}^\top u_t)}{\sum_{d \in V}exp({u_d}^\top u_t)} \right) \label{eq:originalJ}

\end{align}$$.

Derive The Formula for Minimization

To minimize $J(\theta)$ over $\theta$, I compute derivative of $J(\theta)$.

$$ \begin{align}

& \frac{\partial}{\partial \theta} log(\frac{exp({u_{t+j}}^\top u_t)}{\sum_{d \in V}exp({u_d}^\top u_t)}) \\

&= \frac{\partial}{\partial \theta} \left[ log(exp({u_{t+j}}^\top u_t)) – log({\sum_{d \in V}exp({u_d}^\top u_t)}) \right] \\

&= \frac{\partial}{\partial \theta} log(exp({u_{t+j}}^\top u_t)) – \frac{\partial}{\partial \theta} log({\sum_{d \in V}exp({u_d}^\top u_t)}) \\

&= \left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \frac{1}{\sum_{f \in V}exp({u_f}^\top u_t)} {\sum_{d \in V} \frac{\partial}{\partial \theta} exp({u_d}^\top u_t)} \right] \\

&= \left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \frac{1}{\sum_{f \in V}exp({u_f}^\top u_t)} {\sum_{d \in V} exp({u_d}^\top u_t) \frac{\partial}{\partial \theta} {u_d}^\top u_t} \right] \\

&=\left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \frac{1}{\sum_{f \in V}exp({u_f}^\top u_t)} \sum_{d \in V} exp({u_d}^\top u_t) {u_d} \right] \\

&=\left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \frac{\sum_{d \in V} exp({u_d}^\top u_t) {u_d}}{\sum_{f \in V}exp({u_f}^\top u_t)} \right] \\

&=\left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \sum_{d \in V} { \frac{exp({u_d}^\top u_t) }{\sum_{f \in V}exp({u_f}^\top u_t)} u_d } \right] \\

&=\left[ \frac{\partial}{\partial \theta} {u_{t+j}}^\top u_t \right] – \left[ \sum_{d \in V} { p(w_d | w_t ; \theta) u_d } \right] \\

\end{align}

$$

As the computational process will be iterative, we can treat $u_{t+j}$ as constant parameters to $u_t$. So the last step becomes:

$$ u_{t+j} – \left[ \sum_{d \in V} { p(w_d | w_t ; \theta) u_d } \right] $$

Put them together:

$$ \begin{align} \label{eq:derivativeJ}

\frac{\partial J}{\partial \theta} = -\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ u_{t+j} – \sum_{d \in V} { p(w_d | w_t ; \theta) u_d } \right]

\end{align} $$

So, we can now use $\frac{\partial J}{\partial \theta}$ to update $\theta$ so that $\frac{\partial J}{\partial \theta}$ will approach $0$. But how? Let me explain intuitively.

You can think of $\frac{\partial J}{\partial \theta}$ as a slope. A slope is a vector pointing to the direction of changing function value, assuming the function is continuous and convex in a vicinity that contains both $\theta$ and the minimal point of $J(\theta)$. So, if the direction of $\frac{\partial J}{\partial \theta}$ coincides the growing direction of $J(\theta)$, a portion of $\frac{\partial J}{\partial \theta}$ need be subtracted from $\theta$. If the direction of $\frac{\partial J}{\partial \theta}$ contradicts the growing direction of $J(\theta)$, a portion of negated $\frac{\partial J}{\partial \theta}$ need be added to $\theta$, which amounts to also subtracting a portion of $\frac{\partial J}{\partial \theta}$ from $\theta$. Therefore, subtracting a portion of $\frac{\partial J}{\partial \theta}$ from $\theta$ is the method for updating $\theta$ in the minimization process. Repeatedly updating $\theta$ in this way until no changes in $\theta$ is perceivable. This is the Gradient Descent Algorithm. If the portion is not fixed, but changing due to some intuitive, then it is the Adaptive Gradient Descent Algorithm. In formula, it is:

$$ \theta_{k+1} \gets \theta_{k} – \alpha \frac{\partial J}{\partial \theta} $$

Computational Considerations

In the process of computing $\frac{\partial J}{\partial \theta}$, we will need all ${u_a}^\top u_b$’s for all $a,b \in V$. As $|V|$ can be very large. The memory that are required for storing all ${u_a}^\top u_b$’s will be formidably large. So, storing them is better avoided.

Look closely at structure of $$p(w_d | w_t ; \theta) = \frac{exp({u_d}^\top u_t)}{\sum_{f \in V}exp({u_f}^\top u_t)}$$. It needs dot products of $u_t$ with all other vectors $u_f$ for all $f \in V$. And $t$ is just some $f$. Observe structure of $J(\theta)$ again: $$

J(\theta)=-\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} log(p(w_{t+j} | w_t ; \theta))

$$. We can find that in the inner sum $$

\sum_{\substack{-m \le j \le m \\ j \ne 0}}^T log(p(w_{t+j} | w_t ; \theta))

$$, denominator of $p$ is repeatedly computed, and it does not depend on $j$. Hence denominator of $p$ can be calculated beforehand and used repeatedly.

However, you may notice that $p(w_{t+j} | w_t ; \theta)$ still asks for too much computation as it must iterate over the whole vocabulary.

Changing Probability Evaluation Method into An Intuitive One

Let’s focus on $$\begin{align}

log(p(w_{t+j} | w_t ; \theta))

&= log \left(\frac{exp({u_{t+j}}^\top u_t)}{\sum_{d \in V}exp({u_d}^\top u_t)} \right) \\

& \notag \\

&= log(exp({u_{t+j}}^\top u_t)) \quad – \quad log(\sum_{f \in V}exp({u_f}^\top u_t)) \\

&= \underbrace{{u_{t+j}}^\top u_t}_\text{part1} \quad – \quad \underbrace{log(\sum_{f \in V}exp({u_f}^\top u_t))}_\text{part2}

\end{align}$$.

Part1 is a linear part, it measures similarity of two words in a vector space. Part2 removes some ground similarity that $u_t$ is to all words on average. The heavy computation burden comes from part2. So, we need to come up with some efficient, yet possibly approximate, alternatives.

Look deeper into part2. suppose the average of all the exponential is $E$. Then part2 is $log(\sum_{f \in V}E)=log(|V| \cdot E)$. This means that part2 can be treated as a kind of average over the set of ${u_f}^\top u_t$. It has the property that large $exp({u_f}^\top u_t)$’s will dominate the value of the logged sum. Only when all the $exp({u_f}^\top u_t)$’s are close to $0$, will the part2 become negative. In fact, becoming negative is not definitely necessary, because this negativity only helps when all the negative words are dissimilar to the center word. This achieves the similar effect of only punishing similarity between the negative words with the center word. With this observation, part2 becomes $$

\sum_{f \in V}\Lambda({u_f}^\top u_t)

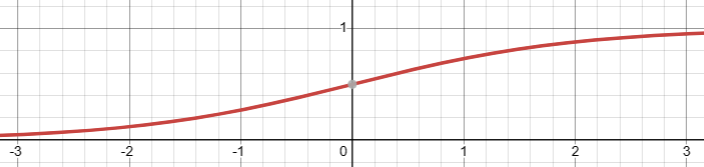

$$, where $\Lambda$ is the rectified linear unit function $

ReLU(x)=

\begin{cases}

x & \text{if } x \ge 0 \\

0 & \text{if } x < 0

\end{cases}

$.

Put them back into the formula of $J$: $$\begin{equation} \label{eq:alternativeL1}

J(\theta)=-\frac{1}{T} \sum_{t=1}^T \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ \underbrace{{u_{t+j}}^\top u_t}_\text{part1} \; – \;

\underbrace{\sum_{f \in V}\Lambda({u_f}^\top u_t)}_\text{part2} \right]

\end{equation}$$. This is a variation of Skip-Gram with Negative Sampling.

The first summation $$

\sum_{t=1}^T

$$ can still be too big. One can update $\theta$ partially by selecting a sample from the indices $\{w_1,…,w_T\}$. This entitles the algorithm Stochastic: $$

J(\theta)=-\frac{1}{|S|} \sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ {u_{t+j}}^\top u_t \; – \;

\sum_{f \in K}\Lambda({u_f}^\top u_t) \right]

$$, where $S$ is a sample of $\{w_1,…,w_T\}$.

Theoretically, if $|S|$ is fixed, $\frac{1}{|S|}$ can be omitted. And $J$ becomes $$\begin{equation} \label{eq:alternativeL2}

J(\theta)=-\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ {u_{t+j}}^\top u_t \; – \;

\sum_{f \in K} \Lambda({u_f}^\top u_t) \right]

\end{equation}$$.

However, removing $\frac{1}{|S|}$ may not be a very good idea. The reason is that $\frac{1}{|S|}$ scales down the derivative, so that it does not grow too big as the number of involved terms increase. Without $\frac{1}{|S|}$, it can easy cause floating-point number overflow.

There are two kinds of samples $S$ and $K$. They are sampled using different strategies. $S$ needs to cover the whole set of word positions, hence sampling of $S$ can be a permutation of $\{w_1,…,w_T\}$ cut into segments. $K$ needs to avoid words in current window, hence it is almost a tendency to cover less frequent words. Two methods can be used. One is to sample the vocabulary uniformly. Due to Zipf’s law, you will most probably always hit less frequent words. The other is to sample the uniform distribution while taking a bias towards less frequent words. Which one is better? Have a try!

Now, consider any partial derivative $\frac{\partial J}{\partial u_i}$ on a single variable. Consider an arbitrary pair $(t, j) \in S \times \{-m \le j \le +m \wedge j \ne 0 \}$.

$\frac{\partial}{\partial u_i}{u_{t+j}}^\top u_t$ can be nonzero only if either $w_{t+j}=w_i$ or $w_t=w_i$.

– When $w_{t+j}=w_i$, $u_t$ is an additive term in the expansion of $\frac{\partial}{\partial u_i}{u_{t+j}}^\top u_t$.

– When $w_t=w_i$, $u_{t+j}$ is an additive term in the expansion of $\frac{\partial}{\partial u_i}{u_{t+j}}^\top u_t$.

Then, consider an arbitrary pair $(t,f) \in S \times K$.

$-\frac{\partial}{\partial u_i}{u_f}^\top u_t$ can be nonzero only if $w_i = w_t \vee w_i = w_f$.

– When $w_i=w_t$, $-u_f$ is an additive term in the expansion of $\frac{\partial}{\partial u_i}{u_f}^\top u_t$.

– When $w_i=w_f$, $-u_t$ is an additive term in the expansion of $\frac{\partial}{\partial u_i}{u_f}^\top u_t$.

The above conditions are not exclusive, meaning, if a $w_i$ satisfies multiple conditions, multiple additive terms will be involved. Because $j$ can have $2m$ values, the expansion of $-\frac{\partial}{\partial u_i}{u_f}^\top u_t$ will be repeated for $2m$ times.

To summarize, define $

\Lambda'(x)=

\begin{cases}

1 & \text{if } x>0 \\

0 & \text{if } x \le 0

\end{cases}

$ and $

I(a,b)=\begin{cases}

1 & \text{if }a=b \\

0 & \text{if } a \ne b

\end{cases}

$, then, $$\begin{align}

&\frac{\partial}{\partial u_i}J(\theta) \\

&=-\frac{1}{|S|}\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[

\frac{\partial}{\partial u_i}{u_{t+j}}^\top u_t \; – \;

\sum_{f \in K} \frac{\partial}{\partial u_i}\Lambda({u_f}^\top u_t) \right] \\

&=- \left\{

\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \frac{1}{|S|}\left[

I(w_{t+j}, w_i) u_t + I(w_t, w_i) u_{t+j}

\right]

\; – \;

2m \sum_{t \in S} \sum_{f \in K} \frac{1}{|S|}\Lambda'({u_f}^\top u_t) \left[

I(w_i, w_t)u_f+I(w_i, w_f)u_t

\right]

\right\} \\

&=-

\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \frac{1}{|S|} \left[

I(w_{t+j}, w_i) u_t + I(w_t, w_i) u_{t+j}

\right]

\; + \;

2m \sum_{t \in S} \sum_{f \in K} \frac{1}{|S|} \Lambda'({u_f}^\top u_t) \left[

I(w_i, w_t)u_f+I(w_i, w_f)u_t

\right] \label{eq:derivativeJ2} \\

\end{align}$$

What Could Be the Problem?

It turns out that still some problems remain challenging.

Non-Convexity

First, let’s look back at equation \eqref{eq:originalJ} or \eqref{eq:alternativeL2}. Think of each $p(\bullet)$s as $x_i$. On any 2-D circle formed by any of $x_i$ and $x_j$, $x_i x_j$ reaches its maximum when $x_i = x_j$, and minimum when $x_i=-x_j$. So do they when applied $exp(\bullet)$. So, $\vec{0}$ is indeed a saddle point. This means that I cannot set initial values of $\theta$ to $\vec{0}$. And this raises a risk in training that $\theta$ might converge to $\vec{0}$. At least, if this happens, I must retrain the model.

Linear Dependency

Look close at \eqref{eq:derivativeJ} and \eqref{eq:derivativeJ2}, you can find that the derivative can only update $\theta$ in multiple of $u_t$ and $u_f$. So, if the $u_t$ and $u_f$ are linearly-dependent to begin with, the resulting $\theta$ can only consist of linearly-dependent vectors. So, I must initialize $\theta$ so that all the $u$’s are likely to be linear-independent, or at least find a way to introduce some disturbance to break the linear-dependency.

Magnitude

$J(\theta)$ is affected by dot products of two word vectors. Positive dot products tend to be greater in positive direction, negative dot products tend to be greater in negative direction. After the dot products growing big, they almost do not affect value of $\sigma$, thus almost do not affect value of $J(\theta)$. This is bad. Because, if a dot product is two big, it will definitely cause a float-pointing overflow, which will make further computation meaningless. So, I will need a mechanism to restrict magnitudes of the word vectors. One way to do it is to add a squared $L_2$ norm. This turns \eqref{eq:alternativeL2} into $$\begin{equation}

J(\theta)=-\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ \frac{1}{|S|} {u_{t+j}}^\top u_t \; – \;

\sum_{f \in K} \frac{1}{|S|} \Lambda({u_f}^\top u_t) \right] + \frac{D}{2} \theta^\top \theta

\end{equation}$$.

Here, $D$ is a constant for tuning the balance between model fidelity and vector magnitude. Then, \eqref{eq:derivativeJ2} becomes $$\begin{align}

\frac{\partial J(\theta)}{\partial u_i}=

-\underbrace{

\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ \ j \ne 0}} \frac{1}{|S|} \left[

I(w_{t+j}, w_i) u_t + I(w_t, w_i) u_{t+j}

\right]}_\text{Term1}

\; + \;

\underbrace{

2m \sum_{t \in S} \sum_{f \in K} \frac{1}{|S|} \Lambda'({u_f}^\top u_t) \left[

I(w_i, w_t)u_f+I(w_i, w_f)u_t

\right]

}_\text{Term2}

\; + \;

\underbrace{ D u_i }_\text{Term3}

\end{align}$$

.

In practice, a correct $D$ may be hard to estimate in advance. Another method could be scaling magnitude of the whole collection of word vectors to some constant, between each sample.

Weighting

In the above formulae, the two sequences $\{a,b,c,d,e\}$ and $\{d,a,c,e,b\}$ are indifferent. This is not good. Because, clearly, “Let me give you books” and “Let me give books you” clearly means different action direction. And the latter one is perhaps very rare. One method to alleviate this problem is weight the dot products in $\text{Term1}$ according to their distance from the center words. So: $$\begin{equation}

J(\theta)=-\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \left[ \mu (1-\tau^{m-|j|+1}) {u_{t+j}}^\top u_t \; – \;

\sum_{f \in K}\Lambda({u_f}^\top u_t) \right] + \frac{D}{2} \theta^\top \theta

\end{equation}$$,

where $0 < \tau \le 1$ is some weight decay constant, and $\mu$ is a constant scaling factor. The reason for $\mu$ is that $\tau^{|j|-1}$ is at most $1$, this weight is likely to underweight $\text{Term1}$, so $\mu$ is used to scale it back.

Then $$\begin{align}

\frac{\partial J(\theta)}{\partial u_i}=

-\underbrace{

\sum_{t \in S} \sum_{\substack{-m \le j \le m \\ \ j \ne 0}} \mu (1-\tau^{m-|j|+1}) \frac{1}{|S|} \left[

I(w_{t+j}, w_i) u_t + I(w_t, w_i) u_{t+j}

\right]}_\text{Term1′}

\; + \;

\underbrace{

2m \sum_{t \in S} \sum_{f \in K} \frac{1}{|S|} \Lambda'({u_f}^\top u_t) \left[

I(w_i, w_t)u_f+I(w_i, w_f)u_t

\right]

}_\text{Term2′}

\; + \;

\underbrace{ D u_i }_\text{Term3′}

\end{align}$$

One way to calculate a $\mu$ is to set $$

\mu=\frac{m}{\sum_{j=1}^m (1-\tau^{m-|j|+1})}

$$.

Stability

To avoid a random imperfect initialization to determine the result prematurely, I added some random disturbance during the training. And because each time only a sample is seen, results from each sample are merged with exponential moving average.